Eighty percent of targets are private individuals with few legal options.

89% of victims find out about their fake images from other people.

Creation tools now need as little as five minutes of voice recordings.

The Democratization of Digital Violation

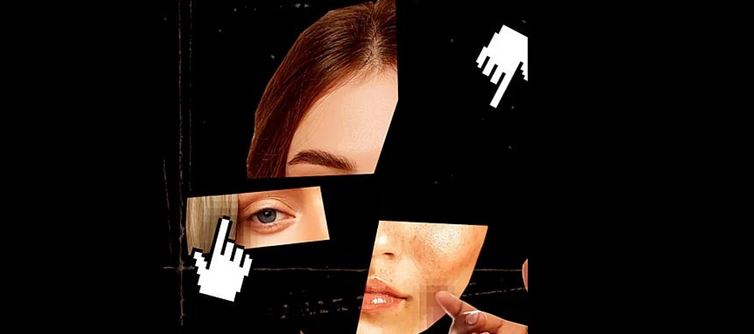

Earlier picture-manipulation techniques required specialized skills. Today's AI tools, by contrast, have "democratized violation": anybody with rudimentary technical literacy can now produce convincing synthetic explicit content. This shift has created an unusual asymmetry of power, moving the threat from skilled criminals to potentially anyone with a grudge.

The low barrier to entry is especially concerning. Creating realistic synthetic media now takes less technical expertise than setting up a social media profile. The technology has outpaced both social norms and regulatory systems, leaving a governance gap in which harm is rampant.

The Invisible Victims

Although celebrities dominate public debate, research shows that up to 80% of AI-generated explicit images target ordinary men and women, using photos drawn from private collections or social media. Victims typically learn about such content through messages from strangers, in dating relationships, or during job interviews.

For many of these individuals, the violation does not end with the original fabrication. Because of digital permanence, copies persist elsewhere even after the content is removed from one location, producing what psychologists call "perpetual victimization": harm with no clear end in sight.

Transforming Digital Consent

The emergence of synthetic media demands a fundamental reexamination of digital consent. People who share a family photo or a professional headshot cannot reasonably consent to every possible AI alteration of that image. Existing frameworks for image sharing must therefore be restructured, or new ones developed, to cover not only real images but also their synthetic derivatives.

Legal experts have begun advocating "digital likeness rights" that would extend established privacy protections to cover synthetic representations. Such rights would mark a significant advance in how personal identity is protected in the digital realm.

A Crisis of Authenticity

Beyond personal injury, AI-generated pornography highlights a pervasive authenticity problem in digital media. The more synthetic content permeates genuine media, the more fundamental the questions society must answer about truth online.

This authenticity dilemma extends beyond explicit content: it threatens the media, democracy, and even trust between people. When people can no longer believe what they see, how will societies arrive at shared truths?

A Multidimensional Response

Combating AI-generated pornography will take more than technological fixes. Detection tools and regulations will be part of a drawn-out process, but other things must change as well.

Digital literacy must incorporate critical media-evaluation skills that account for synthetic content. Education systems need to teach people not only how to use technology but also how to avoid misusing it.

Corporate accountability frameworks must also go beyond content filtering to address the ethics of AI research itself. At what point does the mere ability to produce realistic images of real people create an obligation to put safeguards in place before release?

Above all, cultural understandings of digital representation and consent must mature before precise limits on synthetic personhood can be established. The technology has advanced far faster than the ethical frameworks meant to govern it.

As the digital world grows increasingly artificial, society cannot afford to lose sight of human dignity; proactive frameworks, rather than reactive ones, are therefore essential.

click and follow Indiaherald WhatsApp channel