When Adrian Pauly, a desk-bound software engineer who had battled chronic lower back pain for over a decade, decided he wanted a second opinion, he didn't head to a new specialist.

Instead, he turned to a chatbot. Using ChatGPT's Deep Research feature, Adrian uploaded years' worth of injury records, therapy notes, exercise logs, and personal observations. The AI quickly returned not only a detailed breakdown of his condition but also a dynamic, personalized plan tailored to his everyday needs, something no human physician had ever provided.

"It's like the fog lifted," Adrian told India Today Tech. "I finally understand what is happening in my body, and why certain exercises work. Before this, I honestly wasn't sure if it was fixable. Now, I know it's a solvable problem."

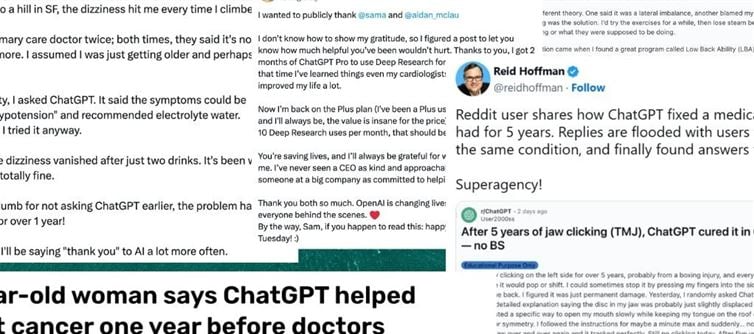

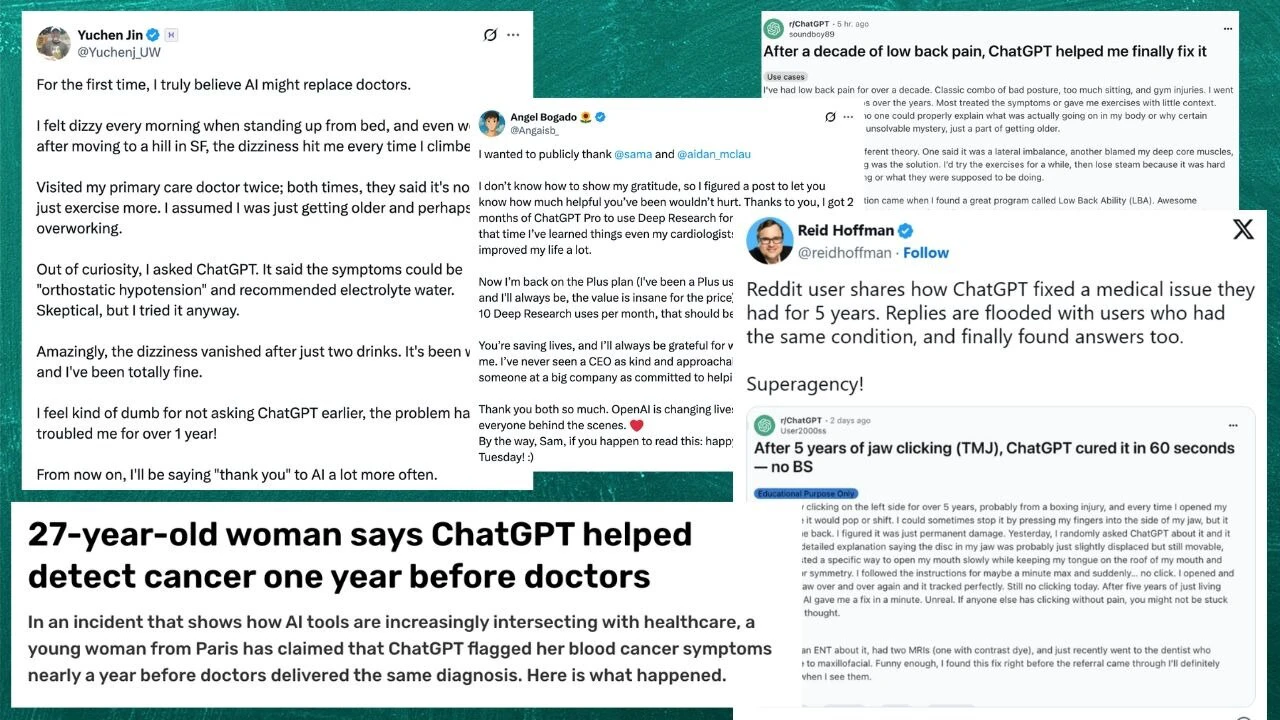

Adrian's story isn't an anomaly. Across Reddit boards, Facebook groups, and X, a growing number of people are sharing stories about turning to AI tools like ChatGPT's Deep Research for medical insights. We also came across Pauly's post on Reddit. Many users now feel that conventional healthcare leaves them wanting. Some say the chatbots have provided clearer explanations, more personalized recommendations, and, in some cases, even more accurate diagnoses than the doctors they visited.

Adrian Pauly's post on Reddit. A Reddit user claims ChatGPT was able to diagnose a problem that doctors couldn't for years.

This phenomenon raises a key question: are AI tools like ChatGPT quietly becoming the new "second opinion"?

From Google to ChatGPT: The evolution of DIY diagnosis

Doctors have long struggled with patients self-diagnosing with the help of "Dr. Google," often arriving at clinics convinced they have rare and catastrophic illnesses. But ChatGPT's Deep Research feature, which lets users upload context-rich documents and ask nuanced follow-up questions, has changed the game.

Dr. Ishwar Gilada, an infectious diseases specialist who has worked extensively with patients managing HIV, STDs, and related phobias, says he has witnessed this evolution firsthand.

"For the last four or five years, patients have been coming in armed with information from Google, sometimes with a distorted or erroneous understanding," he said. "Now, with ChatGPT, the information patients bring in is better organized. It channels their thought process better than Google ever did, although it's still not always entirely logical or accurate."

While ChatGPT represents an upgrade in how information is gathered and synthesized, Dr. Gilada cautions that it's not a replacement for human expertise. "ChatGPT can guide you, help you gauge whether something is serious, and even suggest specialists. But it can't replace a doctor's judgment, experience, or the irreplaceable human touch of counseling," he said.

The doctor's dilemma

The rise of AI-driven health research is forcing many in the medical community to rethink their role.

"When patients come in with ChatGPT-generated information, doctors should first verify the sources," Dr. Gilada suggested. "If the references are credible, say, from peer-reviewed journals, then it is worth considering. It is a huge improvement over Google, where you often don't know how genuine the information is."

More and more doctors are now talking about the role of AI in healthcare: "AI is outperforming doctors."

But the challenge isn't just fact-checking. Dr. Gilada warns that over-reliance on AI could exacerbate a broader societal shift in which human contact is minimized. "Today's generation is glued to screens. Without human conversation, whether with doctors, friends, or your own family, it's easy to start believing you have a disease you don't actually have."

Nonetheless, Dr. Gilada is optimistic that AI can play a significant role in healthcare, provided doctors themselves stay informed. "Doctors have to improvise and stay up to date. If a patient finds a new treatment on ChatGPT that you haven't heard of, and you dismiss them out of ignorance, you lose their trust," he said. "We must experiment with these tools ourselves and feed them better data."

Patients are building their own medical roadmaps

For users like Adrian Pauly, Deep Research has offered something more than a better Google search: it has given them agency.

Frustrated with years of symptom-chasing through conventional physical therapy, Adrian used Deep Research to map out the connections between tight hip flexors, weak glutes, and stress on his sacroiliac (SI) joint, links that his traditional medical visits had never clearly drawn.

"It felt like my physical therapists were just throwing random exercises at the wall," he said. "ChatGPT helped me understand the biomechanics: why specific muscles were underperforming and how that created a chain reaction. Nobody had ever explained it that way before."

Adrian was quick to stress that AI isn't a substitute for medical care. "Use it as a tool, not a replacement," he said. "But if you're curious and want to truly understand your body, it's incredibly useful."

The flexibility was also a game changer. Adrian explained how ChatGPT adapted his exercise routines in real time based on flare-ups, gym schedules, or walking days, a level of personalization he never found in brief doctor consultations.

Meanwhile, some doctors have also raised concerns about "overglorifying ChatGPT."

The fine print

While AI tools can help patients feel more empowered, they also risk leading users down dangerous paths if used recklessly.

ChatGPT, for instance, while structured and articulate, can still occasionally give inaccurate or incomplete information. Dr. Gilada shared an example where ChatGPT mistakenly suggested that human papillomavirus (HPV) could only be sexually transmitted, omitting the fact that it can also spread non-sexually to children and even pets.

"If I had blindly used ChatGPT to make a medical brochure, it would have misinformed people," he noted. "AI can give a great working draft; however, it always requires expert verification."

In short, ChatGPT can kick-start your health journey, but it can't finish it for you.

Ultimately, rather than seeing AI as competition, experts like Dr. Gilada believe the medical community must engage with these tools, refining them, correcting them, and using them as bridges to better patient care. "There will always be a gap between what AI knows and what a doctor knows from experience," he said. "But if doctors and AI can work together, we can help patients feel more informed without losing the human connection."

As for Adrian Pauly, he is simply grateful that something finally clicked. "Deep Research gave me what I was missing: real information. It didn't replace my doctors; it made me a better patient."

click and follow Indiaherald WhatsApp channel